Vehicles are complex machines that rely on an intricate combination of mechanical components, electronics, and software to work properly. Quality testing is key to making them reliable and safe. Automotive testing methods, tools, and strategies reflect the complexity of the system under test: They range from component-level testing using Hardware-in-the-Loop (HiL) or Software-in-the-Loop (SiL) methods to system-level track and fleet testing. Performance and durability are tested across different, overlapping disciplines such as

- Noise, Vibration, Harshness (NVH)

- Acoustics

- Powertrain

- Electrical and Electronic (E/E) integration

- Advanced Driver Assistance Systems (ADAS)

- Passive Safety

- Electromagnetic Compatibility (EMC)

Testing efficiently is key to cutting down development time. This is especially important as development cycles become shorter and over-the-air (OTA) updates allow continuous improvement of vehicles.

There are many ways to shorten testing times. One is to rely more on virtual validation (simulation data). Another is to use clever methods to accelerate long-running durability tests. In any case, it is always important to analyze the generated data efficiently and thoroughly. Getting the data analysis right saves a lot of time: by finding critical errors quickly, by exposing gaps in test coverage early, and by uncovering important system-level insights together with the relevant stakeholders.

Artificial intelligence (AI) is quickly becoming an important tool for test automation and data analysis in automotive testing. Over the last couple of years, deep learning has fundamentally changed the analysis of image, audio, and time series data. More recently, Large Language Models (LLMs) like ChatGPT offer additional capabilities: not only understanding and generating text, but also acting as general assistants that use different tools in complex agentic workflows. These AI techniques can make automotive testing data analytics more efficient, more reliable, and more accessible.

In the following, we summarize five key use cases for AI in automotive testing data analysis. These applications strike a good balance between added value (ROI), technical complexity, and user acceptance.

Automated result assessment

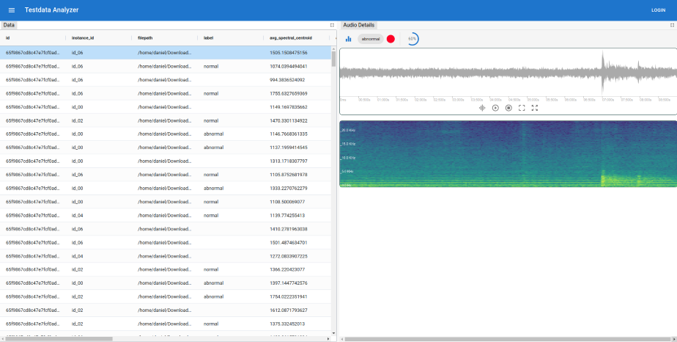

Often, automotive test results can be assessed with simple rules such as thresholds. Sometimes, however, analyzing the test data requires a lot of individual experience and manual work. Acoustic testing is a good example: In many scenarios, including track and end-of-line (EOL) testing, critical samples must be judged by experienced engineers. This is because classical signal processing techniques cannot automatically capture the complex differences between sounds.
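For comparison, the simple rule-based case can be sketched in a few lines. This is a minimal illustration, assuming each measurement is a dictionary of channel traces; the channel names and limits in `LIMITS` are hypothetical.

```python
# Hypothetical limits per measurement channel: name -> (lower, upper); None = no limit.
LIMITS = {
    "sound_pressure_dB": (None, 85.0),
    "battery_voltage_V": (11.5, 14.8),
}


def assess(measurement: dict[str, list[float]], limits=LIMITS) -> dict[str, bool]:
    """Return a pass (True) / fail (False) verdict for every channel that has a limit."""
    verdicts = {}
    for channel, (lower, upper) in limits.items():
        values = measurement[channel]
        ok = True
        if lower is not None and min(values) < lower:
            ok = False  # signal dropped below the lower limit at least once
        if upper is not None and max(values) > upper:
            ok = False  # signal exceeded the upper limit at least once
        verdicts[channel] = ok
    return verdicts
```

Rules like this are cheap and transparent, but they only work when "good" and "bad" can be separated by a fixed limit, which is exactly what breaks down for the acoustic judgments described above.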

In contrast, AI techniques like deep learning can identify faulty samples or critical disturbances in the measurement signal. This event detection can be done in a supervised way, for example by training a neural network on labeled data. Check out our blog post on training an acoustic event detection system for more information. We have found that this can drastically cut down the time required to create test reports while also improving reproducibility.
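To make the supervised idea concrete without a deep learning framework, the sketch below trains the smallest possible neural network, a single sigmoid neuron (i.e. logistic regression), on labeled audio frames. It is a simplified stand-in, not the system from the blog post: the two hand-crafted features (RMS energy and zero-crossing rate), the function names, and the hyperparameters are all assumptions. A real detector would learn features from spectrograms with a deeper network.

```python
import math


def frame_features(signal, frame_len):
    """Split a signal into non-overlapping frames and compute two simple features per
    frame: RMS energy and zero-crossing rate (hand-crafted stand-ins for learned features)."""
    feats = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        zcr = sum(1 for a, b in zip(frame, frame[1:]) if a * b < 0) / (frame_len - 1)
        feats.append([rms, zcr])
    return feats


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(features, labels, lr=0.5, epochs=500):
    """Fit a single sigmoid neuron with full-batch gradient descent on the log loss."""
    w = [0.0] * len(features[0])
    b = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(features, labels):
            # Prediction error for this labeled frame (label 1 = event, 0 = normal).
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi / n
            grad_b += err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(model, x, threshold=0.5):
    """Return True if the frame is classified as containing an event."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= threshold
```

Usage: compute `frame_features` for labeled recordings, call `train(features, labels)`, then apply `predict` frame by frame to new measurements to localize events in time.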

We have identified three core success factors for automating test result analysis in automotive with AI. First, building an AI solution requires significant effort, especially in ensuring data quality, so the business case must be big enough to justify the cost. Second, we have repeatedly seen that the biggest bottleneck in such a system is providing high-quality training data. Self-supervised learning and active learning strategies can significantly speed up the data annotation process. Finally, the performance and acceptance of the final solution depend heavily on the UI. In the end, AI in automotive testing is often not about the model being a few percentage points better. It is much more about building convenience and trust through a well-thought-out UI and explainable AI.
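Active learning speeds up annotation by letting the current model decide which recordings an engineer should label next. A common strategy is uncertainty sampling, sketched below under a few assumptions: the model outputs an event probability per recording, and `predict_proba`, `annotate`, and the recording IDs are hypothetical placeholders for your model and labeling UI.

```python
def uncertainty_sampling(probabilities: dict[str, float], budget: int) -> list[str]:
    """Pick the `budget` unlabeled samples whose predicted event probability is
    closest to 0.5, i.e. where the current model is least certain."""
    ranked = sorted(probabilities, key=lambda sample_id: abs(probabilities[sample_id] - 0.5))
    return ranked[:budget]


def active_learning_round(predict_proba, unlabeled: dict, labeled: dict, annotate, budget: int) -> dict:
    """One round: score all unlabeled samples, ask a human to label the most uncertain
    ones, and move them into the labeled set (retraining happens outside this function)."""
    probabilities = {sample_id: predict_proba(x) for sample_id, x in unlabeled.items()}
    for sample_id in uncertainty_sampling(probabilities, budget):
        labeled[sample_id] = (unlabeled.pop(sample_id), annotate(sample_id))
    return labeled
```

Repeating "retrain, score, label the most uncertain samples" usually needs far fewer labels than annotating recordings at random, because engineers spend their time on the borderline cases the model cannot yet decide.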

Fig. 1: Building an efficient UI and using explainable AI strategies is a big success factor for ML-based acoustic analysis

Faster root cause analysis

Sometimes, analyzing automotive test data is detective work, and it takes a lot of time to get to the bottom of a problem. For fleet data, engineers often use pre-configured dashboards that data experts build with classical business intelligence tools (e.g. Power BI, Apache Superset) on cloud infrastructure (a data lakehouse). One disadvantage of this approach is that such dashboards are not flexible enough for many data exploration and root cause analysis workflows. They also lack the ability to analyze raw data. That is why desktop-based engineering tools (e.g. Vector CANoe, NI DIAdem) are used for in-depth analysis or tracing. Engineers could save a lot of time if they could access complex fleet testing data in an interactive, unconstrained way.

The current generation of LLMs is a big enabler for this scenario. As a testing data AI assistant, an LLM can act as a personal data analyst for test engineers. In this role, it can help with two things: It can turn complex natural language queries into SQL that is then run on a database, and it can help create interactive visualizations of the data. In our experience, it is crucial to get the data description in the model context right. We have also found that this use case works well with smaller open source LLMs, especially in an agent-based system. This matters because smaller open source models can be hosted in-house, which makes the approach feasible even for internal and confidential data.
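The two points above, the data description in the model context and the safe execution of generated SQL, can be sketched with Python's built-in `sqlite3`. This is a minimal illustration under assumptions: the `fleet_events` table and its columns are hypothetical, and the LLM call itself is omitted, so in the usage below a hand-written SQL string stands in for a model response.

```python
import sqlite3

# Hypothetical table description: this is the "data description" the LLM sees.
SCHEMA_DESCRIPTION = """Table fleet_events:
  vehicle_id  TEXT  -- pseudonymized vehicle identifier
  event_code  TEXT  -- diagnostic trouble code, e.g. 'P0A80'
  odometer_km REAL  -- odometer reading when the event occurred
  ts          TEXT  -- ISO 8601 timestamp (UTC)"""


def build_prompt(question: str) -> str:
    """Put the schema description into the model context together with the question."""
    return (
        "You translate questions about automotive fleet test data into SQLite SQL.\n"
        f"{SCHEMA_DESCRIPTION}\n"
        "Answer with a single SELECT statement and nothing else.\n"
        f"Question: {question}\nSQL:"
    )


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Execute LLM-generated SQL, refusing anything that is not a single SELECT.
    This check is deliberately conservative but not a full security boundary;
    opening the database read-only adds a second safeguard."""
    statement = sql.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("only single SELECT statements are allowed")
    return conn.execute(statement).fetchall()
```

For a file-based database, `sqlite3.connect("file:fleet.db?mode=ro", uri=True)` opens it read-only, so even a statement that slips past the check cannot modify the data. The file name here is, again, only an example.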

Fig. 2: LLMs can translate natural language queries into SQL so domain experts can talk to their data.

Writing assistant for test specifications and reports

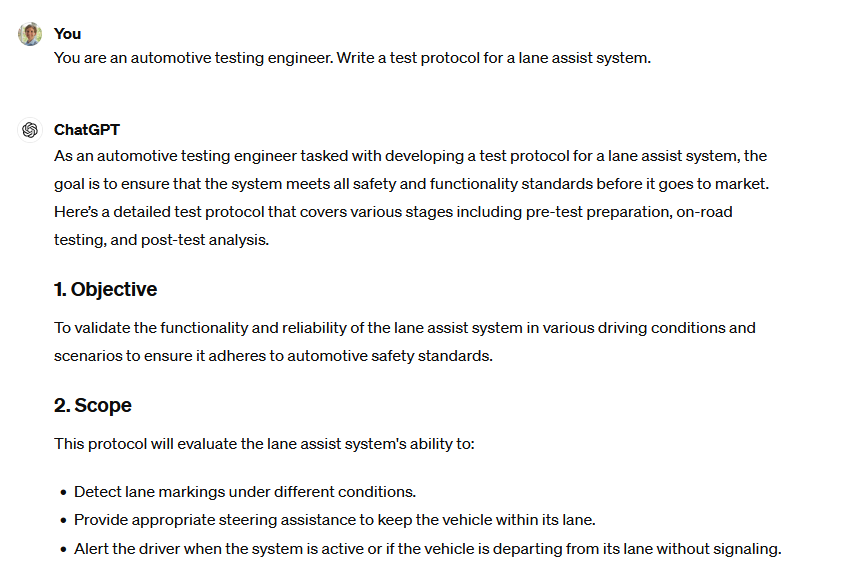

Automotive test engineers spend a lot of time creating, reviewing, and comparing documents. Automating these tasks has a long history in testing; most reports, for example, are generated from a template. Modern AI can take these efforts to a new level: Instead of just filling static templates, LLMs can write and compare dynamic, context-dependent specifications and reports. AI-assisted test case generation not only saves time but can also speed up the overall process and help ensure more complete test coverage.

While ChatGPT can already generate simple test cases out of the box (see Fig. 3), it has only a superficial understanding of the automotive testing domain. To be actually useful, the model has to be adapted to a company-specific database. This can be done by fine-tuning, but the training step is costly. As a first step, few-shot learning via prompt engineering and retrieval-augmented generation (RAG) can be used to generate meaningful specifications. Constraining the LLM's output, for example to a predefined format, can help ensure that it is formally correct.
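The sketch below shows the shape of such a pipeline under explicit simplifications. Retrieval uses plain word overlap (Jaccard similarity) as a stand-in for the embedding search of a real RAG system. The retrieved examples become few-shot demonstrations in the prompt, and the model's answer is validated afterwards, a simple post-hoc alternative to constrained decoding, which enforces the format during generation instead. The example database, test case IDs, and JSON fields are hypothetical, and the LLM call is omitted.

```python
import json

# Hypothetical company-specific examples; in practice retrieved from a specification database.
SPEC_DB = [
    {"requirement": "Wiper must stop in park position when ignition is switched off",
     "test_case": {"id": "TC-WIP-001", "preconditions": ["Wiper running at speed 1"],
                   "steps": ["Switch ignition off"], "expected": "Wiper arm stops in park position"}},
    {"requirement": "Low beam headlights must switch on automatically in darkness",
     "test_case": {"id": "TC-LGT-001", "preconditions": ["Light switch in AUTO"],
                   "steps": ["Cover the light sensor"], "expected": "Low beam switches on"}},
    {"requirement": "Doors must lock automatically above 15 km/h",
     "test_case": {"id": "TC-DOR-001", "preconditions": ["All doors closed"],
                   "steps": ["Accelerate to 20 km/h"], "expected": "All doors lock"}},
]

# Expected structure of a generated test case: field name -> required Python type.
REQUIRED_FIELDS = {"id": str, "preconditions": list, "steps": list, "expected": str}


def tokens(text: str) -> set[str]:
    return set(text.lower().split())


def retrieve(requirement: str, k: int = 2) -> list[dict]:
    """Rank stored examples by word-overlap (Jaccard) similarity to the new requirement."""
    query = tokens(requirement)

    def score(entry: dict) -> float:
        words = tokens(entry["requirement"])
        return len(query & words) / len(query | words)

    return sorted(SPEC_DB, key=score, reverse=True)[:k]


def build_few_shot_prompt(requirement: str, k: int = 2) -> str:
    """Use the most similar stored examples as few-shot demonstrations."""
    parts = ["Write a test case as JSON with the keys id, preconditions, steps, expected."]
    for ex in retrieve(requirement, k):
        parts.append(f"Requirement: {ex['requirement']}\nTest case: {json.dumps(ex['test_case'])}")
    parts.append(f"Requirement: {requirement}\nTest case:")
    return "\n\n".join(parts)


def validate_test_case(raw_output: str) -> dict:
    """Parse the LLM output and check it against the expected structure.
    Raises ValueError (json.JSONDecodeError is a subclass) for malformed output."""
    case = json.loads(raw_output)
    if not isinstance(case, dict):
        raise ValueError("test case must be a JSON object")
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(case.get(key), expected_type):
            raise ValueError(f"missing or malformed field: {key}")
    return case
```

A rejected answer can simply be sent back to the model together with the validation error, which often fixes formatting problems without any fine-tuning.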

Fig. 3: Simple test case generation with ChatGPT. LLMs have to be adapted using fine-tuning or RAG in order to be useful in real applications.

Breaking down data silos

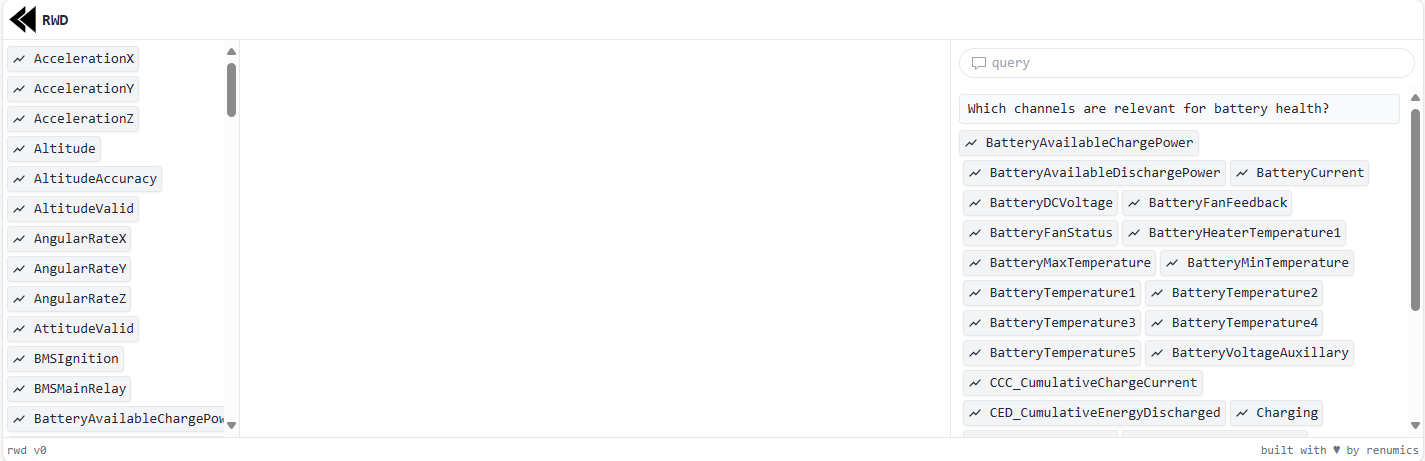

Automotive testing is a heterogeneous domain that spans many different disciplines, stakeholders, IT systems, and data formats. The number of data formats alone that a typical OEM must deal with is mind-boggling. Breaking down these data silos enables a holistic analysis of testing data, which saves a lot of time and improves development workflows. Data format standardization has come a long way, and there is a shift towards open standards (e.g. ASAM MDF, ASAM ODS). However, properly describing the semantics of the data remains a challenge, especially for legacy systems.

Getting the data semantics right is important not only for classical data analytics workflows; it is also an absolute necessity when using AI data assistants. Fortunately, LLMs can not only consume data catalogs but are also good at creating them. They can contribute fundamental domain understanding (see Fig. 4) to generate meaningful dataset descriptions.
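A practical way to let an LLM draft a data catalog is to ground it in facts extracted from the data itself. The sketch below, under assumptions, profiles each measurement channel (unit, sample count, range, mean) and turns the profiles into a prompt; the cryptic channel names mimic legacy naming and are hypothetical, and the LLM call is omitted. Generated descriptions should still be reviewed by domain experts.

```python
import statistics


def profile_channels(channels: dict[str, list[float]], units: dict[str, str]) -> list[dict]:
    """Compute basic statistics per channel as factual grounding for the LLM."""
    profiles = []
    for name, values in channels.items():
        profiles.append({
            "name": name,
            "unit": units.get(name, "unknown"),
            "samples": len(values),
            "min": min(values),
            "max": max(values),
            "mean": round(statistics.fmean(values), 3),
        })
    return profiles


def catalog_prompt(profiles: list[dict]) -> str:
    """Ask the model for one-line channel descriptions, allowing it to admit uncertainty."""
    lines = [
        "For each measurement channel below, write a one-sentence description of what it",
        "most likely measures in a vehicle test. Answer 'unclear' if you are not sure.",
        "",
    ]
    for p in profiles:
        lines.append(
            f"- {p['name']} [{p['unit']}]: n={p['samples']}, "
            f"min={p['min']}, max={p['max']}, mean={p['mean']}"
        )
    return "\n".join(lines)
```

Passing the value ranges alongside the names lets the model use its domain knowledge (for instance, that a channel in rpm ranging from idle to a few thousand is probably engine speed) instead of guessing from the name alone.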

Fig. 4: LLMs can contextualize data by using learned domain knowledge.

Anomaly detection

Stuff goes wrong all the time. And the faster mistakes are caught, the better (and cheaper!). However, monitoring automotive testing data through dashboards and reports is time-consuming, and it only works well for known error sources. Catching unknown errors is especially challenging. Anomaly detection algorithms do exactly that: Starting from a known normal state of operation, they detect deviations in the data.

Technically, there are many different ways to implement anomaly detection. Running outlier detection on domain-specific features is probably the most straightforward one. For track testing, it can make sense to use forecasts from Long Short-Term Memory (LSTM) networks as a baseline for the normal state. Alternatively, deep learning-based autoencoders offer a very general but powerful approach to anomaly detection. In any case, it is important to understand the data well and to enrich it with AI-generated features.
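The most straightforward variant, outlier detection on domain-specific features, can be sketched with Python's `statistics` module. The sketch assumes each test run has already been condensed into a few scalar features; the feature names are hypothetical, and the simple z-score rule stands in for more robust outlier detectors. The LSTM and autoencoder approaches follow the same pattern but need a deep learning framework to model the normal state.

```python
import math
import statistics


def fit_reference(reference_runs: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Mean and sample standard deviation of every feature over normal-state runs
    (needs at least two reference runs)."""
    features = reference_runs[0].keys()
    return {
        f: (statistics.fmean([r[f] for r in reference_runs]),
            statistics.stdev([r[f] for r in reference_runs]))
        for f in features
    }


def outlier_features(run: dict[str, float], reference: dict[str, tuple[float, float]],
                     z_max: float = 3.0) -> dict[str, float]:
    """Return the features of `run` whose z-score relative to the reference exceeds z_max."""
    flagged = {}
    for f, (mean, std) in reference.items():
        if std == 0:
            # No spread in the reference: any deviation is flagged as infinitely far away.
            if run[f] != mean:
                flagged[f] = math.copysign(math.inf, run[f] - mean)
            continue
        z = (run[f] - mean) / std
        if abs(z) > z_max:
            flagged[f] = round(z, 2)
    return flagged
```

Reporting which feature triggered a detection, and by how much, is a small step towards the explainability that makes operators trust such a system.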

In an anomaly detection scenario, domain knowledge must be injected into the algorithm to constrain the operational domain. Two typical ways to do this are selecting the multivariate channels to be monitored and selecting appropriate golden runs that serve as the reference state. Even with such constraints in place, operators can still be overwhelmed by false positive detections. From our experience, we recommend two things to get started: First, build a use case that already benefits from finding known errors, which gives you a better entry point. Then, make sure a UI is in place that allows handling detections very efficiently. In the beginning, this is much more important than a more accurate model.
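Both kinds of domain knowledge, the channel selection and the golden runs, can be expressed directly in code. The sketch below builds a per-sample tolerance band from the golden runs for the selected channels only and reports where a new run leaves the band. It rests on a strong simplifying assumption: all runs are already time-aligned and equally long (real drive cycles usually need resampling or alignment first). Channel names and margins are hypothetical.

```python
def golden_envelope(golden_runs: list[dict[str, list[float]]], channels: list[str],
                    margin: dict[str, float]) -> dict[str, tuple[list[float], list[float]]]:
    """Per-sample min/max band over the golden runs, widened by a per-channel margin.
    Only the selected channels are monitored; all others are ignored."""
    envelope = {}
    for ch in channels:
        traces = [run[ch] for run in golden_runs]
        lower = [min(vals) - margin[ch] for vals in zip(*traces)]
        upper = [max(vals) + margin[ch] for vals in zip(*traces)]
        envelope[ch] = (lower, upper)
    return envelope


def detect(run: dict[str, list[float]],
           envelope: dict[str, tuple[list[float], list[float]]]) -> dict[str, list[int]]:
    """Return, per monitored channel, the sample indices where the run leaves the band."""
    detections = {}
    for ch, (lower, upper) in envelope.items():
        idx = [i for i, (x, lo, hi) in enumerate(zip(run[ch], lower, upper))
               if not lo <= x <= hi]
        if idx:
            detections[ch] = idx
    return detections
```

The `margin` parameter is the main knob against false positives: widening it trades sensitivity for fewer detections, which is exactly the kind of tuning a good UI should make easy for operators.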

Conclusions

AI has already revolutionized image and text processing and is currently doing the same for other modalities such as audio and time series signals. Consequently, AI is becoming increasingly important in automotive testing data analysis. In addition to classical use cases like automated result assessment and anomaly detection, the current generation of LLMs facilitates the creation of a holistic testing data assistant. Such an assistant can help democratize data access for faster root cause analysis and serve as a writing and reading aid for testing-related documents.